The Amazing Guide: 7 Steps to Write Assignments with ChatGPT and Use AI Positively

Feeling buried under a mountain of homework? The pressure to complete essays, reports, and research papers on tight deadlines is a struggle every student knows well. For many, the long hours of research and writing can be overwhelming. But what if you had a powerful assistant that could help you brainstorm ideas, structure your thoughts, and polish your work in a fraction of the time? That assistant is here, and its name is ChatGPT. Using AI is now a key skill, and knowing how to properly write assignments with ChatGPT can be the ultimate academic game-changer.

This is not a guide about cheating. This is a guide about working smarter. Simply asking an AI to “write an essay for me” is not only unethical but also tends to produce generic, low-quality work that teachers can often spot. The real secret is using ChatGPT as a tool to enhance your own thinking, not replace it.

This in-depth guide will walk you through seven essential steps to write assignments with ChatGPT the right way. We’ll cover everything from creating powerful outlines and writing the perfect prompts to simplifying complex topics and, most importantly, ensuring your final work is 100% original and in your own voice.

Before You Start: The Golden Rule of Using ChatGPT Ethically

Before we dive into the “how-to,” we must establish one non-negotiable rule: ChatGPT is your assistant, not your author. The goal is to use it for support, not to have it do the work for you. Submitting AI-generated text as your own is plagiarism, period. The best way to write assignments with ChatGPT is to use it as a brainstorming partner and a super-smart editor, while ensuring the final words, ideas, and arguments are entirely your own. Think of it like a calculator in math: it helps with the process, but you still need to understand the problem.

Step 1: Brainstorming Ideas and Unlocking Creativity

Every great assignment starts with a great idea, but staring at a blank page can be intimidating. This is where your AI assistant shines as a creative partner. Instead of feeling stuck, you can use ChatGPT to explore different angles for your topic and break through mental blocks.

Let’s say your assignment is about the impacts of social media. A weak prompt would be “give me ideas.” A strong prompt would be:

“Act as a university sociologist. I need to write a 10-page paper on the impacts of social media. Brainstorm five unique and specific essay topics for me. For each topic, suggest a potential argument, a counter-argument, and a real-world example.”

This prompt gives you structured, specific starting points in seconds. It helps you move from a broad topic to a focused thesis statement, which is the foundation of any good assignment. Using this method to write assignments with ChatGPT keeps your work original from the very beginning, because you still choose the angle and build the argument yourself.

Step 2: Creating a Powerful Outline in Minutes

Once you have your topic, the next step is creating a logical structure. A well-organized outline is the key to a coherent and persuasive assignment, but building one can be time-consuming. ChatGPT can create a detailed outline for you in seconds, giving you a clear roadmap for your writing.

Continuing with our example, you could prompt:

“Create a detailed 5-section outline for an essay with the argument that ‘while social media connects us globally, it negatively impacts mental health through social comparison.’ Include bullet points for key evidence to include in each section.”

The AI will generate a structured outline with an introduction, body paragraphs with specific points, and a conclusion. This is one of the most effective ways to write assignments with ChatGPT because it handles the organizational heavy lifting, allowing you to focus on filling in the details with your own research and analysis.

Step 3: Drafting Your First Version (The Right Way)

This is where many students make a mistake. You should never ask ChatGPT to write the full draft for you. Instead, you write the draft yourself, using the AI to help you with specific, difficult parts. Think of it as co-writing, where you are the lead author.

If you get stuck on a paragraph, you can ask for help. For example:

“I’m writing a paragraph about the concept of ‘social comparison’ on Instagram. Can you explain this concept in three simple sentences and tell me which researchers or search terms would help me find a scholarly definition I can cite?”

You then take that explanation, rewrite it in your own words, and integrate it into your paragraph. Look up any source it mentions yourself before citing it, because ChatGPT can produce references that look real but do not exist. This approach ensures the writing style remains yours. When you write assignments with ChatGPT this way, you maintain full control and ownership of the work, using the AI as a very knowledgeable research assistant.

Step 4: Simplifying Complex Topics and Research

Is there a difficult theory or dense research paper you can’t seem to understand? ChatGPT is an incredible tool for breaking down complex information into simple, easy-to-digest explanations. You can copy and paste a confusing paragraph or ask about a specific concept.

A powerful prompt would be:

“Explain the ‘echo chamber effect’ as if you were talking to a high school student. Use a simple analogy to make it easy to understand.”

This ability to simplify is like having a patient tutor available 24/7. It can save you hours of struggling through dense academic texts. This is a perfectly ethical and highly effective strategy when you write assignments with ChatGPT, as your goal is to understand the material better so you can explain it yourself.

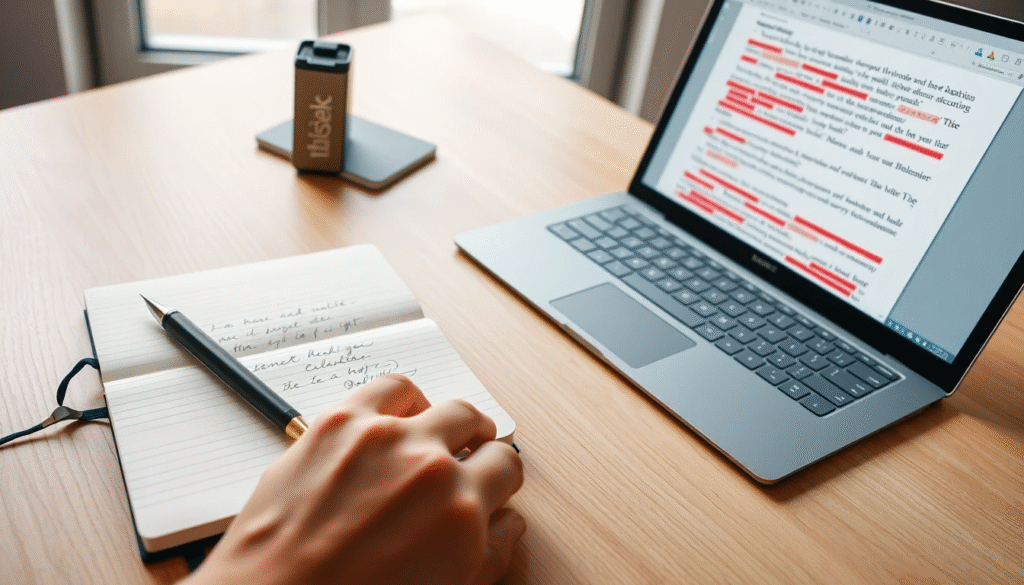

Step 5: Improving and Editing Your Draft with AI

Once your first draft is complete, ChatGPT becomes your personal editor. It can help you improve clarity, refine your tone, and fix grammatical errors. You can copy and paste your own writing into the AI and ask for specific feedback.

Here are some editing prompts to try:

- “Review this paragraph and suggest ways to make it more persuasive.”

- “Check this text for any grammatical errors or awkward phrasing.”

- “Can you rephrase this sentence to sound more academic?”

Using an AI to polish your own work is similar to using Grammarly or the spell-checker in Microsoft Word, as long as the ideas and final wording remain yours. This is a crucial step to write assignments with ChatGPT professionally, as it helps you turn a good draft into a great one.

Step 6: Writing the Perfect Prompts for Your Assignments

The quality of the AI’s output depends heavily on the quality of your input. Learning basic prompt engineering is the key to unlocking ChatGPT’s full potential. A great prompt always includes three things:

- Role: Tell the AI who to be (e.g., “Act as a history professor,” “Act as a professional editor”).

- Context: Give it the background information it needs to understand your request.

- Task: Tell it exactly what you want it to do in a clear, step-by-step manner.

Mastering this simple formula will dramatically improve the results you get. It’s a fundamental skill you need to effectively write assignments with ChatGPT.
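If you reuse prompts often, it can help to treat the Role, Context, Task formula as a fill-in-the-blanks template. The short Python sketch below is one way to do that, assuming you are comfortable running a small script. `build_prompt` is a hypothetical helper written for this guide, not part of ChatGPT or any OpenAI library; it only assembles text that you then paste into the chat yourself.

```python
def build_prompt(role: str, context: str, task: str) -> str:
    """Combine the Role, Context, and Task parts into one prompt string.

    Hypothetical helper for illustration only: it does not contact ChatGPT,
    it just formats text for you to copy into the chat window.
    """
    parts = {"role": role, "context": context, "task": task}
    # Refuse to build a prompt with an empty part, since each one matters.
    for name, value in parts.items():
        if not value.strip():
            raise ValueError(f"{name} must not be empty")
    return "\n".join([
        f"Act as {role.strip()}.",       # Role: who the AI should be
        f"Context: {context.strip()}",   # Context: background on your assignment
        f"Task: {task.strip()}",         # Task: exactly what you want done
    ])


if __name__ == "__main__":
    prompt = build_prompt(
        role="a university sociologist",
        context="I am writing a 10-page paper on the impacts of social media.",
        task="Brainstorm five specific essay topics. For each, suggest an argument, "
             "a counter-argument, and a real-world example.",
    )
    print(prompt)
```

Keeping the three parts separate makes it easy to swap in a new role or task while reusing the same context for every prompt about one assignment.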

Step 7: The Final, Non-Negotiable Plagiarism Check

Even if you use ChatGPT ethically and write everything in your own words, it’s still a good idea to run your work through a plagiarism checker. This is your final safety net to ensure your assignment is completely original. Many universities use tools such as Turnitin, but you can also use free online checkers.

This step protects you and reinforces good academic practice. It’s the final stamp of approval after you write assignments with ChatGPT, confirming that the work is truly yours. For more information, you can read our other posts on academic integrity. When checking your work, stick to well-known, reputable plagiarism checker sites.

Note: All information in this guide is shared for educational purposes only, and users are responsible for any misuse.

Conclusion

In today’s academic world, knowing how to write assignments with ChatGPT is a powerful skill. It’s not about finding shortcuts to avoid work; it’s about leveraging a revolutionary tool to enhance your learning process. By using ChatGPT as a brainstorming partner, an organizational assistant, and a smart editor, you can produce higher-quality work in less time. Remember the golden rule: AI should be your assistant, not your author. Master these seven steps, and you’ll be well on your way to studying smarter and achieving academic success.

Frequently Asked Questions

Is it cheating to write assignments with ChatGPT?

It is cheating if you copy and paste the AI’s work and submit it as your own. It is not cheating if you use it as a tool for brainstorming, outlining, and editing your own original writing, as long as your instructor’s policy allows it.

How can I avoid plagiarism when using ChatGPT?

Always write the final draft in your own words. Use ChatGPT for ideas and explanations, but never submit its text directly. Always run a plagiarism check before submitting.

What is the best way to ask ChatGPT for help with homework?

Write detailed prompts. Give the AI a role (e.g., “act as a tutor”), provide context about your assignment, and give it a clear, specific task to perform.

Can ChatGPT help me with research for my assignments?

Yes, it’s excellent for summarizing long articles and explaining complex topics. However, always verify facts and find proper academic sources for your citations.

Can teachers detect if I used ChatGPT?

AI-generated text often has a robotic or generic style that experienced teachers can spot. It’s always best to use it as an assistant and write in your own unique voice.

What is the most useful feature of ChatGPT for assignments?

Its ability to brainstorm ideas and create a structured outline is one of its most powerful features. This saves a lot of time and helps organize your thoughts logically.

Can ChatGPT help me improve my writing skills?

Absolutely. You can paste your own writing and ask it to suggest improvements, fix grammar, or rephrase sentences to sound more academic, acting as a personal editor.

Should I use ChatGPT for math or science assignments?

It can be helpful for explaining concepts and showing step-by-step processes. However, always double-check calculations, as AI can sometimes make mathematical errors.

Are there alternatives to ChatGPT for writing assignments?

Yes, tools like Google’s Gemini and Anthropic’s Claude are also excellent for writing assistance. Each has slightly different strengths, so it’s worth trying them to see which you prefer.

How do I cite information I got from ChatGPT?

You should not cite ChatGPT as a primary source. Use it to find ideas and explanations, then locate that information in a proper academic source (like a book or journal) and cite that source.